Microsoft is joining calls for increased regulation of the artificial intelligence industry, including suggesting a new government agency to oversee laws and licensing of AI systems.

On Thursday, Microsoft shared a 40-page report that lays out a blueprint for regulating AI technologies, with suggestions like implementing a “government-led AI safety framework” and developing measures to deter the use of AI to deceive or defraud people. The report also calls for a new government agency to implement any laws or regulations passed to govern AI.

“The guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone,” said Microsoft President Brad Smith in the introduction to the report, titled “Governing AI: A Blueprint for the Future.”

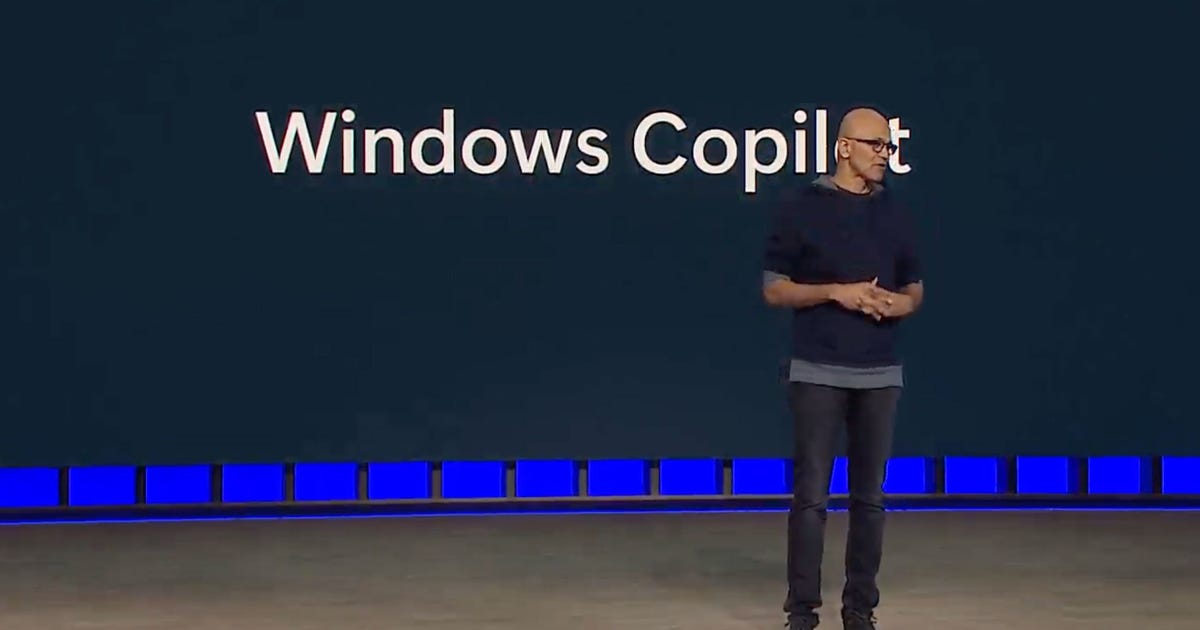

Microsoft has been rapidly rolling out generative AI tools and features across its software and other products, powered by technology from OpenAI, the maker of ChatGPT. Microsoft has invested billions in OpenAI and also reached a deal to let the AI powerhouse use Bing search engine data to improve ChatGPT.

Microsoft’s call for increased regulation comes after OpenAI CEO Sam Altman expressed similar sentiments while testifying before Congress on May 16. The AI company’s leaders followed up with a blog post on Monday that said there will eventually need to be an international authority that can inspect, audit and restrict AI systems.

After OpenAI unveiled ChatGPT late last year, Microsoft helped fuel the current race to launch new AI tools with the release of a new AI-powered Bing search in February. Since then, we’ve seen a flood of generative AI-infused tools and features from Google, Adobe, Meta and others. While these tools have vast potential to help people with tasks big and small, they’ve also sparked concerns about the potential risks of AI.

In March, an open letter issued by the nonprofit Future of Life Institute called for a pause in development of AI systems more advanced than OpenAI’s GPT-4, cautioning they could “pose profound risks to society and humanity.” The letter was signed by more than 1,000 people, including Tesla CEO Elon Musk, Apple co-founder Steve Wozniak and experts in AI, computer science and other fields.

Microsoft acknowledged these risks in its report on Thursday, saying a regulatory framework is needed to anticipate and get ahead of potential problems.

“We need to acknowledge the simple truth that not all actors are well intentioned or well-equipped to address the challenges that highly capable models present,” reads the report. “Some actors will use AI as a weapon, not a tool, and others will underestimate the safety challenges that lie ahead.”
